In a team of four, we created Squirveillance over a period of 30 days. We worked in our spare time, with different members being available at different times.

To start on a positive note, we have A LOT to be proud of.

We can all certainly claim that we finished making a game (which is never a given during a jam).

The 30-day development period afforded us some extra time just for planning out the project, and I think we took advantage of this well. We could agree upon a number of small but significant things in advance: our git repository rules, Unity project structure, and a breakdown of tasks per category and per person.

Commenters were generally impressed. We enticed players with the concept and presentation. We have plenty of unique sounds and graphics, and a full script of dialogue. There’s polish where it matters. We aimed higher than plenty of other jam games, and in that way, it paid off.

Scoring 8th place in the Overall category (out of 600+ submissions!) landed us a mention on the official GitHub blog, which was very nice.

Players not progressing

That said, we had a clear problem. A lot of commenters mentioned not knowing how to progress. The majority, even.

This is an example of how one problem may be a result of several smaller ones.

Incomplete design

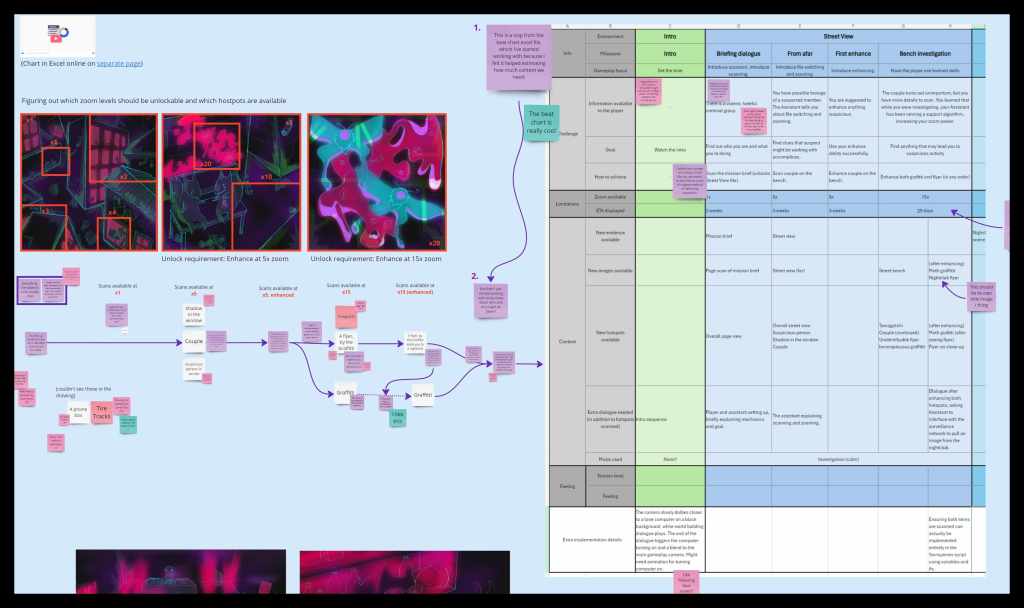

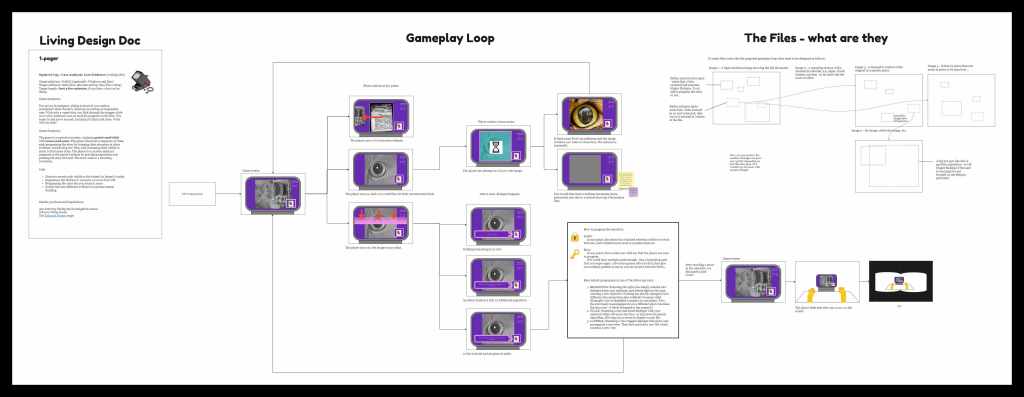

The progression of the game is primarily narrative-driven. Players will read through a fair amount of text, and (ideally) use what they’ve learned to unlock new evidence and progress until the ending. Considering this, we had relatively few resources to tackle this area, which created stress for team members. If we break down the responsibilities here, there was actually a fair amount to do:

- Write an overarching story – what is going on, who are the characters, what are their motivations and how are they pursuing their goals?

- Translate that story into a game. That means establishing the locks and keys to progression, deciding the order in which the player discovers new facts, and establishing in detail what assets we need. This was a particularly big one, and the first to receive edits at the first signs of trouble.

- Write dialogue for a number of elements: the main thread of the story, any supporting dialogue, flavour text and potentially any red herrings.

- Constantly go back and forth between the three. Examine, review, and edit.
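
As an aside, the locks-and-keys step lends itself to a quick sanity check. The snippet below is a minimal sketch in Python, not taken from the actual project: the evidence names and the `unlock_order` helper are made up for illustration. It assumes each piece of evidence lists the facts the player must already know to unlock it, and it reports anything that can never be reached.

```python
# Hypothetical progression data: each piece of evidence maps to the set of
# facts (keys) the player must already know before it can be unlocked.
LOCKS = {
    "bench_couple": set(),
    "dropped_note": {"bench_couple"},
    "van_plate": {"dropped_note"},
    "ending": {"van_plate", "dropped_note"},
}


def unlock_order(locks):
    """Return one valid discovery order, or raise if some evidence is unreachable."""
    known = set()
    order = []
    remaining = dict(locks)
    while remaining:
        # Evidence is ready once all of its required facts are already known.
        ready = sorted(name for name, keys in remaining.items() if keys <= known)
        if not ready:
            raise ValueError(f"unreachable evidence: {sorted(remaining)}")
        for name in ready:
            known.add(name)
            order.append(name)
            del remaining[name]
    return order


print(unlock_order(LOCKS))
```

A check like this only proves that a path to the ending exists. It says nothing about whether the story gives players a reason to look in the right place, which still takes playtesting to find out.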

By the end of the development period, a lot of the above was still open. Realising we had little time, we focused our efforts on wrapping up what we had, and creating the impression of something going on. Expectations weren’t too high after all, as it was a free product for a game jam. We didn’t do any revisions.

Still, the unfortunate consequence is that players can’t rely on the story to make informed decisions on which items to scan, and which areas to enhance. The puzzles and the story don’t really synergise. One commenter pointed out that it’s nonsensical to have the first puzzle focus on a couple sitting on a bench, when there’s so much else going on in the scene, and they were right.

No playtesting

Our first playtesters were other jammers, after the submission deadline. In other words, we didn’t really playtest. This is very common for a jam, but also something I’ve been trying to move away from, as the importance of playtesting can’t be overstated. Not surprisingly, most comments mentioned not being able to progress.

And I come to this conclusion regularly, yet here we are again. How come? I think there’s a certain point at which we like to imagine the game is finished enough to start getting meaningful answers from playtests. 60% – 70%?

Here’s something to think about, though. A lot of jam-made games won’t ever reach that level of completeness, especially when compared with our initial vision of the game. Also, some feedback on an incomplete version is better than no feedback at all.

We should have playtested somewhere in the middle of development, regardless of the game not yet resembling the completed version.

How we responded to mitigate the issue (but not fix it)

There was a month-long voting period immediately after the jam submission period, during which we couldn’t publish any changes to the game that weren’t critical bug fixes. Still, we had some room to manoeuvre in steering players in the right direction.

First, we published a written walkthrough on the game page. We actually did that before anyone played the game, because we understood the importance of having one. Even if we had paid more attention to our game’s questionable progression, anyone can get stuck. We wanted everyone to be able to finish. Unfortunately, the comments mentioning inability to progress started coming in quickly.

Then, we made a video walkthrough, which seemed to help a bit. Though interestingly, we still had some people struggling. Some even mentioned watching the walkthrough, which gave us a very important bit of insight (more on that later).

After the voting period was over, I published a small update to the game, which added a new mechanic – pressing a button would reveal all scannable areas, which all but pointed the players to how to progress.

My main motivation for adding this feature after the jam was to help players reach the end of the game (as we were quite happy with the twist we put there). I consider it a band-aid solution, and ideally would have liked to go back and fix the issues proper. But I was also content with accepting the game as finished, and surely a hint system this powerful would allow everyone to progress?

Then, a new comment appeared from a player unable to progress despite using the hint system.

Are players misunderstanding the mechanics?

That’s actually very plausible. We use a number of verbs such as zoom, switch, scan and enhance as primary actions that the player must be able to use effectively to progress. Given that our idea was a bit more unusual than a typical 2D platformer, it’s easy to imagine someone confusing scanning and enhancing, and being unable to follow a video walkthrough, even when knowing where to look. Someone might also assume they’re running into a glitch and give up prematurely.

Examining this would require a more hands-on playtest with a number of people. I haven’t done this yet, but I am certainly curious enough to try at some point. Without any evidence available though, my money is on this: the mechanics are simply not explained well enough. We’re not letting players play around with them enough before presenting them with an area where it’s too easy to get distracted.